The computer mouse was one of the most innovative inventions for inputting data and instructions into a computer. It was a stroke of genius that accelerated the dominance of personal computers in our lives. But, like all things, the mouse is almost dead.

Prior to the mouse, you had to remember the entire command lexicon of the operating system and then type in each command with the appropriate switches and parameters. UNIX gurus still do this today, but they were born on a different planet of nerdom. I know, because I am one of them. The mouse let you drop down one of twenty menus, fan out the submenus, and make a selection. It was a precision instrument, and the very need for a scroll wheel proved just how many menus there were to wade through.

The iPhone was the thin edge of the wedge that sounded the mouse's death knell. The thumb and forefinger became the chief method of selecting menu items. The iPad and the plethora of smartphones that followed sealed the mouse's fate. This trend isn't going away anytime soon. As a matter of fact, there is now a phone that is your laptop.

So what does this mean for UX, or User Experience? We have to be all thumbs, or mouseless. This is a huge challenge.

A thumb-driven menu takes up a lot of screen real estate. And it is a mortal sin to give up functionality just because you are using an application or a web page on a portable device. What we need now are extreme UX techniques, especially when it comes to menus.

We have to ditch the massive hierarchical menu model and be a lot smarter. Gone are the days when all you had to do was add the following to your code:

```cpp
// Managed Extensions for C++ (Windows Forms): a "File" menu and a "New" item
MenuItem *myMenuItemFile = new MenuItem(S"&File");
MenuItem *myMenuItemNew = new MenuItem(S"&New");
```

UX testing shows that there are definite hot spots on an application or web screen that the human eye is drawn to first. Humans are highly adaptable and quickly settle into patterns that reflect how the brain works. Eye-tracking studies show that users scan screens in an "F" pattern. The first thing we look at is the top horizontal bar of the F; in other words, the very first bit of content. That does not mean we look at the little menu items at the top of the page. It doesn't take long for our subconscious mind to figure out that those are itty-bitty, unimportant items we can ignore until we really need them.

If we are designing an engaging User Experience, we should let the content rule the top bar of the F. After all, content is king. People don't come for the menus.

However, if your web page or application requires the user to take actions, the menu is an important function. The original web designers got it right when they put the menu on the left-hand side of the page. That is the vertical stem of the F, and thousands of views of a screen have taught our minds to look there.

As for the menu itself, we no longer have the luxury of a gazillion menu items and drop-down menus filled with options and choices galore. Even the concept of displaying only the menus you need is not productive in a thumb-driven environment, because users don't know what they are going to need until they use it.

Psychologists tell us that humans do best with four to seven choices. So in Extreme UX, that is all the top or main menu will have. I can hear the gasps now. Imagine a program like Microsoft Word with only four top menu items. You can't, because Word is written by dinosaurs, wringing every last bit of monetary gain they can out of the 1980s.

So how would an extreme programmer do it? With brilliance and envelope-pushing code. Let me introduce a term: "Application Use Lifecycle." Huh?

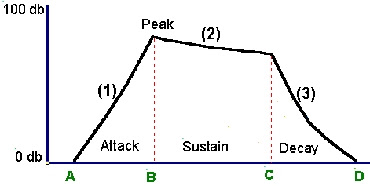

Just as software development has a lifecycle, so does the use of an application. When you launch an application to start a brand-new piece of work, you need a very different set of commands than when you open something that has already been worked on in that application.

Mapping out the stages of the Application Use Lifecycle will give you a good idea of which menu items are needed when. The next step is to write some supervisor code that figures out where the user is in the Application Use Lifecycle. Once you know that, you know which menu items are appropriate at that point.

If you want a tutorial on how to do this, you will have to wait for my book on the subject, or hire me to teach your programmers. However, I can guarantee that with the death of the mouse, you will have to adopt something like this to accommodate thumb-driven menus.

There is an upside to the death of a mouse: if you see a dead mouse, you know it is safe to go ahead and score the cheese.

More on this topic to come.