A New Branch of Artificial Intelligence Mathematics Is Needed For Artificial Neural Networks

Isaac Newton described himself as a "natural philosopher". He did experiments in motion and gravity by rolling balls down inclines and measuring and extrapolating the results. He was acquainted with Archimedes of Syracuse's Method of Exhaustion for calculating the area inside a circle. He studied the works of French mathematician Pierre de Fermat and his work with tangents. He was willing to work with infinite series and alternate forms of expressing them. He gravitated towards the idea (sorry for the pun, but it is fitting, so to speak) of the infinitesimal. He determined the area under a curve by extrapolating it from its rate of change, and invented calculus. Leibniz also invented calculus independently of Newton, and he, too, introduced notation that made the concepts understandable and calculable.

It was Thomas Bayes who defined Bayesian inference and rules of probability. Although he developed some of the ideas in relation to proving the existence of a divine being, his formulas have been co-opted as the basis of machine learning.

The process repeated itself again with George Boole, who created a representational language and branch of mathematical logic called Boolean Algebra that is the basis of the information age.

Mathematics is a universal language with its own abstract symbology that describes concepts, observations, events, predictions and values based on numbers. The whole shebang is independent of spoken cultural communications and human experience. It is the purest form of description in the most concise manner possible.

Those who are bound by the strictures of conventional thinking have the idea that almost everything that we know about mathematics has been described or discovered. I beg to differ.

Very recent mathematics, the modularity theorem for elliptic curves, had to be used to prove Fermat's Last Theorem: https://en.wikipedia.org/wiki/Fermat%27s_Last_Theorem There are new discoveries almost every day in mathematics, and the velocity of discovery will increase.

It is my feeling that the field of mathematics, as used to describe how things work on earth and in the universe, is evolving to be a more precise way of stating imprecise events -- which happens to be the macro human experience on earth, and may also be the static of quantum dynamics.

The reason that I bring this up is that I just read a piece about DeepMind, Google's new AI acquisition. Its program, AlphaGo, just beat the European Go champion five times in a row. Go is an ancient Chinese board game. If you are thinking "So what?", I'd like to take this opportunity to point out that the possible permutations and combinations in the game of Go outnumber the atoms in the universe. Obviously this was not a brute force solution.

The way that DeepMind created this machine was to combine search trees with deep neural networks and reinforcement learning. The program would play games across 50 computers and would learn with each game. This is how John Naughton, a journalist from the Guardian, described what was happening:

The really significant thing about AlphaGo is that it (and its creators) cannot explain its moves. And yet it plays a very difficult game expertly. So it’s displaying a capability eerily similar to what we call intuition – “knowledge obtained without conscious reasoning”. Up to now, we have regarded that as an exclusively human prerogative. It’s what Newton was on about when he wrote “Hypotheses non fingo” in the second edition of his Principia: “I don’t make hypotheses,” he’s saying, “I just know.”

But if AlphaGo really is a demonstration that machines could be intuitive, then we have definitely crossed a Rubicon of some kind. For intuition is a slippery idea and until now we have thought about it exclusively in human terms. Because Newton was a genius, we’re prepared to take him at his word, just as we are inclined to trust the intuition of a mother who thinks there’s something wrong with her child or the suspicion one has that a particular individual is not telling the truth. Link: http://www.theguardian.com/commentisfree/2016/jan/31/google-alphago-deepmind-artificial-intelligence-intuititive

There is something deep in me that rebels at the thought of intuition at this stage of progress in Artificial Intelligence. I think that intuition, as well as artificial consciousness may eventually be built with massively parallel massive neural networks, but we are not there yet. We haven't crossed the Rubicon as far as that is concerned.

What we really need is a new branch of mathematics to describe what goes on in an artificial neural network. We need a new Fermat, Leibniz, Boole or Newton to start playing with the concepts and logic states of neural networks and synthesize the universal language, theories and concepts that will describe the inner workings of a massively parallel artificial neural network.

This new field could start anywhere. The math of an individual neuron is completely understood. We know the basic arithmetic that multiplies weights with inputs and sums them. We understand how to use a sigmoid activation function or a rectified linear function to decide whether the artificial neuron fires. We understand how to use back propagation, gradient descent and a variety of other tools to adjust the weights and thresholds to a more correct overall response. We even understand how to put the neurons into a Cartesian coordinate matrix and do transformation matrices. But that is as far as it goes.
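To make the "completely understood" part concrete, here is a minimal Python sketch of a single artificial neuron: a weighted sum, a sigmoid or rectified linear activation, and one gradient descent update. The function names, the squared-error loss and the learning rate are my own assumptions for illustration, not taken from any particular framework.

```python
import math


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def relu(z):
    """Rectified linear activation: pass positives, clamp negatives to zero."""
    return max(0.0, z)


def neuron_output(weights, bias, inputs, activation=sigmoid):
    """Multiply weights with inputs, sum them, add the bias, then activate."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)


def gradient_step(weights, bias, inputs, target, learning_rate=0.5):
    """One gradient descent update for a sigmoid neuron.

    Assumes a squared-error loss, 0.5 * (output - target) ** 2.
    """
    out = neuron_output(weights, bias, inputs)
    # Chain rule: d(loss)/d(out) * d(out)/d(z) for the sigmoid.
    delta = (out - target) * out * (1.0 - out)
    new_weights = [w - learning_rate * delta * x for w, x in zip(weights, inputs)]
    new_bias = bias - learning_rate * delta
    return new_weights, new_bias
```

Calling `gradient_step` repeatedly nudges the neuron's output towards the target. That much is tidy arithmetic; the sketch says nothing about what a whole field of such neurons has learned.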

We cannot define an overall function of the entire field in mathematical terms. We cannot define the field effects coupled to the inner workings and relationships of the neural networks to specific values and outcomes. We cannot representationally take the macro functions that neural networks solve, couple them to other macro functions, and get useful computational ability from a theoretical or high-level point of view.

The time has come. We need some serious work on the mathematics of Artificial Intelligence. We need to be able to describe what the nets have learned and be able to replicate that with new nets. We need a new language, a new understanding and a new framework using this branch of mathematics. It is this field that will prevent a technology singularity.

The Ultimate Cataclysmic Computer Virus That's Coming - The Invisible Apocalypse

Way back in March of 1999, the Melissa virus was so virulent that it forced Microsoft and other Fortune 500 companies to completely turn off email so that it could be quarantined. The Mydoom worm infected over a quarter of a million computers in a single day in January 2004. The ILOVEYOU virus was just as bad. A worm called Storm became active in 2007 and infected over 50 million computers. At one point Storm was responsible for 20% of the internet's email. This was the magnitude of the virus threats of the past. Nowadays there is a shrinking habitat because most people have antivirus software.

The website AV-Comparatives.org measures the efficacy of antivirus software, and as a whole, the industry is pretty good at winning the battle of virus and malware protection. Here is their latest chart on the performance of various players in the field. It measures their efficiency at detecting threats. There are just a very few players below a 95% detection rate. It seems that virus infection affects mostly those who aren't careful or knowledgeable about intrusion and infection threats.

Viruses piggyback from other computers and enter your computer under false pretenses. Anti-virus code works in two ways. It first tries to match code against a library of known bad actors. Then it uses heuristics to try to identify malicious code that it doesn't know about. Malicious code is code that is executable -- binary or byte code instructions to the CPU, as compared to, say, photos or files which do not have these coherent binary instructions in them.
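The two-stage idea can be sketched in a few lines of Python. This is a toy under loud assumptions: the "library of known bad actors" is a made-up set of SHA-256 digests, and the heuristic merely checks whether the bytes start like an executable or a script. Real antivirus engines are far more sophisticated than this.

```python
import hashlib

# Hypothetical signature library: digests of files already known to be bad.
KNOWN_BAD_DIGESTS = {hashlib.sha256(b"pretend-malicious-payload").hexdigest()}

# Hypothetical heuristic: leading bytes that mark executable content
# (Windows executable, Linux ELF binary, shell script).
EXECUTABLE_PREFIXES = (b"MZ", b"\x7fELF", b"#!/")


def scan(data: bytes) -> str:
    """Return 'known-bad', 'suspicious' or 'clean' for a blob of bytes."""
    # Stage 1: exact match against the library of known bad actors.
    if hashlib.sha256(data).hexdigest() in KNOWN_BAD_DIGESTS:
        return "known-bad"
    # Stage 2: crude heuristic for unknown content that looks executable.
    if data.startswith(EXECUTABLE_PREFIXES):
        return "suspicious"
    return "clean"
```

Note what both stages depend on: there has to be something that looks like code to inspect.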

Viruses now have to come in from the exterior, and when you have programs looking at every packet received, the bad guys have to try to trick you into loading the viruses with links in emails, or into visiting malicious sites where code is injected via the browser. As such, it is possible to keep the viruses at bay most of the time.

But we are due for a huge paradigm shift, and an ultimate, cataclysmic computer virus is coming, and its emergence will be invisible to the current generation of anti-virus programs. It will be the enemy within. And it will reside in the brains of the computer -- the artificial intelligence component of the machine. Let me explain.

Artificial intelligence programs which rely on artificial neural networks consist of small units called neurons. Each neuron is a simple thing that takes one or more inputs, multiplies each by a weight, sums the results and adds a bias. The sum then goes through an activation function to determine if the neuron fires. These neurons are arranged in layers and matrices, and the layers feed successive layers in the network. In the learning phase, the weights of the inputs are adjusted through back propagation until the machine "knows" the right response for the inputs.
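Here is a small sketch of how layers feed successive layers, in plain Python with a sigmoid activation. The nested-list layout for weights and the function names are assumptions made for this example only.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def layer_forward(weights, biases, inputs):
    """Compute one layer. weights[j] holds the input weights of neuron j."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


def network_forward(layers, inputs):
    """Feed the outputs of each layer into the next one, in order.

    layers is a list of (weights, biases) pairs.
    """
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(weights, biases, activations)
    return activations
```

Everything the network "knows" lives in those lists of numbers, which is why looking at them tells you so little about what the network does.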

In today's programs, the layers are monolithic matrices that usually live in a program that resides in memory when the AI program is fired up. That is a simple paradigm, and as the networks grow and grow, that model of a discrete program in memory will become outmoded. Even with the advances of Moore's Law, if an artificial neural network grew to millions of neurons, they cannot all be kept in active memory.

I myself have built an artificial intelligence framework whereby I use object oriented programming and serialization for the neural networks. What this means is that each neuron is an object in the computer programming sense. Each layer is also an object in memory, and each feature map (which is sort of a sub layer in convolutional neural networks) is also an object containing neurons. The axons which hold the values from the outputs of neurons are objects as well. When they are not being used, they are serialized, frozen in time with their values, and written to disk, to be resurrected when needed. They fire up when needed, just like in a biological brain. The rest of the time, they are quiescent little blobs of files sitting on the disk doing nothing and looking like nothing. These things would be the ticking time bomb that would unleash chaos.
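For readers who want to see the freeze-and-resurrect idea, here is a rough Python sketch. It is not the framework described above: the class names, the JSON file format and the `freeze`/`thaw` method names are all assumptions chosen for illustration.

```python
import json
import os
import tempfile


class Neuron:
    """A neuron as an object: just its weights and bias."""

    def __init__(self, weights, bias=0.0):
        self.weights = list(weights)
        self.bias = bias

    def to_dict(self):
        return {"weights": self.weights, "bias": self.bias}

    @classmethod
    def from_dict(cls, data):
        return cls(data["weights"], data["bias"])


class Layer:
    """A layer as an object containing neurons."""

    def __init__(self, neurons):
        self.neurons = list(neurons)

    def freeze(self, path):
        """Serialize the layer, with its current values, to a file on disk."""
        with open(path, "w") as f:
            json.dump([n.to_dict() for n in self.neurons], f)

    @classmethod
    def thaw(cls, path):
        """Resurrect a layer from disk in its previous state."""
        with open(path) as f:
            return cls(Neuron.from_dict(d) for d in json.load(f))


# Usage: freeze a layer, forget it, and bring it back with the same values.
with tempfile.TemporaryDirectory() as folder:
    path = os.path.join(folder, "layer.json")
    Layer([Neuron([0.25, -0.5], bias=0.1)]).freeze(path)
    restored = Layer.thaw(path)
```

On disk the frozen layer is only a list of numbers. Nothing in the file looks like executable instructions.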

These types of Artificial Neural Networks are able to clone themselves, and will be able to retrain parts of themselves to continuously improve their capabilities. I see the day, when one will install trained AI nets instead of software for many functions. And there is the vulnerability.

An AI network can be trained to do anything. Suppose one trained a neural network to generate malicious code among other more innocent functions. It would create the invisible apocalypse. The code would be created from a series of simple neural nets. One cannot tell what neural nets do by examining them. There would be no code coming from external sources. The neural nets that create the code could be serialized as harmless bits and bytes of a program object whose function is incapable of being determined until you ran those neural nets AND monitored the output. The number of neurons in the neural nets would be variable because of synaptic pruning, recurrent value propagation, genetic learning and various other self-improvement algorithms that throw up and sometimes throw out neurons, layers and feature maps.

This would be the most clever and devious virus of all time. It would be virtually undetectable, and synthesized by the artificial intelligence of the machine inside the machine. Stopping it would be impossible.

So Elon Musk and Stephen Hawking would be right to fear artificial intelligence -- especially if it were subverted to create an AI virus internally without ever being discovered until it started wreaking destruction.

That day is coming. I'm sure that I could turn out a neural network to write a virus with today's technology. Viruses are simple things that cause great harm. A complex AI network could generate them surreptitiously, hold them back until needed and strike strategically to cause the most damage. This is something that security companies should be thinking about now.

Google Translation Fail - An Obituary

I have friends who live in various places around the world. One of my friends from France had a relative who died. I googled for the obituary. Since it was on a server in France, and I operate in English, Google Chrome automagically decided to translate the obituary for me. Here is the result:

you are sad to announce the death Geneviève ******* occurred December 16, at the age of 84. The worship of thanksgiving will be celebrated Tuesday, December 18, at 15 am at the Church of Temple Prostestante Unie de France Condé-sur-Noireau. According to his wishes, the collection will be done without his presence. That natural flowers. It will not be sent invitations this opinion in its stead. The family thanks the people who will be associated with his sentence. Mrs. Florence ****, 303, rue de Paris, Flers

French should be an easy language to translate. If I can speak French (and I can), any reasonably trained computer program should be able to understand and translate it. And Google is the best of the best, and yet it still can't get it right. It didn't know context. It didn't know it was an obituary. It didn't even know that the subject was a woman. It confuses gender more than once. The most intriguing part is that the poor 84 year old woman appears to have been sentenced to death, according to the second last sentence in the paragraph, and the family seems happy and filled with gratitude over the death sentence.

This just proves the old adage:

If the translator is a man, HE translates.

If the translator is a woman, SHE translates.

If the translator is a computer, IT translates.

If the translator is either a man or a woman, S/HE translates.

Whether the translator is a man, a woman or a computer, S/H/IT translates.

Creating A Technology Singularity In Three Easy Paradigms

If you are only vaguely aware of what a technological singularity is, let me give you a short refresher and quote Wikipedia. "A computer, network, or robot would theoretically be capable of recursive self-improvement (redesigning itself), or of designing and building computers or robots better than itself on its own. Repetitions of this cycle would likely result in a runaway effect – an intelligence explosion – where smart machines design successive generations of increasingly powerful machines, creating intelligence far exceeding human intellectual capacity and control. Because the capabilities of such a superintelligence may be impossible for a human to comprehend, the technological singularity is the point beyond which events may become unpredictable or even unfathomable to human intelligence."

Quoting Wikipedia again on recursive self improvement: "Recursive self-improvement is the speculative ability of a strong artificial intelligence computer program to program its own software, recursively. This is sometimes also referred to as Seed AI because if an AI were created with engineering capabilities that matched or surpassed those of its human creators, it would have the potential to autonomously improve the design of its constituent software and hardware. Having undergone these improvements, it would then be better able to find ways of optimizing its structure and improving its abilities further. It is speculated that over many iterations, such an AI would far surpass human cognitive abilities."

So the bottom line is that a Seed AI would start either smartening itself up, or replicating itself and learning everything that it comes in contact with. And its library is the entire world if that Seed AI is connected to the internet.

We do have a biological model for replicating intelligence and it is the gene. Richard Dawkins has stated that in the case of the animals, for example an elephant, a gene has to build an entire elephant to propagate itself. A human has to build another human so that it has a brain capable of intelligence. Not intelligence itself, mind you, but a capability (sometimes, for some people). Here is the difference. Suppose that we have children, and our children are born with the intelligence that we, the parents, have gleaned over our lifetime. And suppose it repeated itself cumulatively. Their children would have their accumulated intelligence plus ours. And so on. In just a few generations, the great grandparents would seem like primitive natives with a limited range of knowledge of how the world, the universe and everything operates. Unfortunately, intelligence is not transmitted genetically -- only the capability for intelligence (in some cases).

There is, however, some intelligence or know-how that is genetically transmitted. Animals and bugs have some sort of software or firmware built into them. Insects know how to fly perfectly after hatching and know enough to avoid a swat. Mosquitoes know how to track humans for blood. Leafcutter ants know enough to chop leaves and ferment them to grow fungus for food without being taught. Animals are prepared with all the BIOS firmware that they need to operate their lives, and they pass it on. I suspect that if humans were to continuously evolve according to Darwinian evolution, we just might pass on intelligence, but that information has been left out of our genetic code. Instead we have the ability to learn and that's it. If we crack the genetic code of how embedded behaviors are passed on in say insects, and apply that to man, we could in fact win the Intelligence Arms Race between humans and computers. The limitation would be the size of the human genome in terms of carrying coded information.

But Darwinian evolution is dead in the cyber world. In the biological world, the penalty for being wrong is death. If you evolved towards a dead end failure, you too would die and so would your potential progeny. In the virtual world, since cycles and generations are arbitrary, failure has no consequences. I myself have generated thousands, if not millions of neural nets only to throw them out when the dumb things were too thick to learn (wrong number of layers, wrong starting values for weights and biases, incomplete or dirty data for training sets, etc, etc). But there are lessons to be learned from mimicking Nature. One of the lessons to be learned, is how biological brains work. More on this a bit later.

So, if a technological singularity is possible, I would like to start building the Seed AI necessary for it. Why? Why do people climb Everest? I am incorrigibly curious. As a child, I stuck a knife into an electrical socket to see what would happen. I could not believe the total unpleasantness of the electric shock that I got. Curiosity killed the cat, but Satisfaction brought him back. I did go on to electrical engineering, proving that Freudian theory is bunk and aversion therapy doesn't work. So without further ado, I would like to present the creation of a Technological Singularity in Three Easy Paradigms.

Paradigm One ~ Perturbation: Create supervisory neural nets to observe or create a perturbation in an existing Artificial Neural Network. The perturbation could be anything. It could be a prime number buried within the string of the resultant calculation of gradient descent. (Artificial neurons generate long strings of numbers behind a decimal place.) It could be an input that throws a null type exception. It could be a perfect sigmoid function value of 1.000 in an activation function. It could be anything, but you need a perturbation seed recognized by the supervisory circuit.

Paradigm Two ~ Instantiation & Linking : Once the supervisory circuit recognizes the perturbation, it goes into the Instantiation & Linking mode. When I homebrewed my own AI, I went counter to conventional ANN (Artificial Neural Net) programming. I went totally object oriented. Each neuron and/or perceptron was an object. Each layer was an object containing the neurons. The axons, storing the outputs of the previous layers, were objects. Then I made controllers with many modes and methods of configuration, and then I could instantiate a brand new AI machine or a part of another one just by calling the constructor of the controller with some configuration parameters.

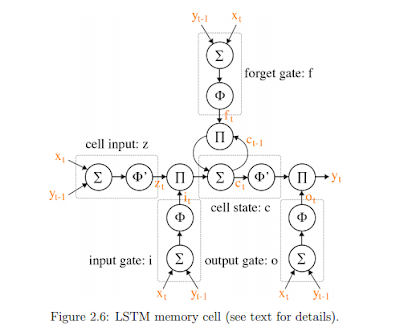

So, once a perturbation was recognized, I would throw up a new ANN. Its inputs would be either random, or according to a fuzzy rules engine. The fuzzy rules engine would be akin to the hypothalamus in the brain that creates chemo-receptors and hormones and such. Not everything is a neural net, but everything is activated by neural nets. With current research in ANNs, you could even have a Long Short Term Memory neural network (google the academic papers) remembering inputs that just don't fit into what the machine knows, and feed those into the newly made network. Or you could save those stimuli inputs for a new neural network to come up with a completely different function.

You also create links from other networks. Creative knowledge is synthesized by linking two seemingly separate things to come up with a third. A good example is Kekulé's dream of a snake eating its own tail, which was the basis for cyclic hydrocarbons and organic chemistry. Biological brains work and learn by linking. An artificial neural network can simulate linkage by inputting and exciting layers of different neural networks with a layer of something completely different like the diagram below:

Paradigm Three ~ Learning: So now that you have perturbed the network to throw up some new neural nets and you have done the linkages, you can train it by back propagation to learn, calculate or recognize stuff. It could be stuff from the Long Short Term Memory net. It could be stuff gleaned from crawling the internet, or by plumbing the depths of data in its own file system. It could be anything. Here's the good part. Suppose that the new neural nets are dumber than doorposts. Suppose they can't learn beyond a reasonable threshold of exactitude. So what do you do? They could be re-purposed, or simply thrown out. Death of neurons means nothing to a machine. We humans supposedly kill 10,000 neurons with every drink of alcohol, and still have a pile left. Heavy drinkers may turn into slobbering idiots in their old age, but that won't happen with machines, because unlike humans, they can make more neurons.

So once you have an intelligent machine, and it has access to the cloud, it itself becomes a virus. Most object oriented programming languages have some kind of clone function. If the neural networks are objects in memory that can be serialized, then the whole shebang can be cloned. If you are not into programming, what the previous sentence means is that with Object Oriented Programming, the Objects are held in memory. For example you could have an object called "bike". Bike has several methods like Bike.getColor() or Bike.getNumberOfGears(). Even though these objects are in dynamic memory, they can be frozen into a file on the disk and resurrected in their previous state. So if you had a blue bike and did Bike.setColor("red") and serialized your bike, it would remember it was red when you brought it back to life in memory.
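The bike example translates almost directly into code. The sketch below uses Python, so the method names follow Python's naming style (`get_color` rather than `getColor`); the default of 21 gears, the JSON text format and the `serialize`/`deserialize` names are assumptions for illustration.

```python
import copy
import json


class Bike:
    def __init__(self, color="blue", gears=21):
        self._color = color
        self._gears = gears

    def get_color(self):
        return self._color

    def set_color(self, color):
        self._color = color

    def get_number_of_gears(self):
        return self._gears

    def serialize(self):
        """Freeze the bike's current state into text that could go in a file."""
        return json.dumps({"color": self._color, "gears": self._gears})

    @classmethod
    def deserialize(cls, text):
        """Bring a frozen bike back to life in memory."""
        state = json.loads(text)
        return cls(state["color"], state["gears"])


bike = Bike("blue")
bike.set_color("red")
frozen = bike.serialize()           # the red bike, frozen in time
revived = Bike.deserialize(frozen)  # still red when resurrected
twin = copy.deepcopy(bike)          # an independent clone of the live object
twin.set_color("green")             # changing the clone leaves the original red
```

Swap the bike for a neural network object and the same two moves, serialize and clone, copy everything the network has learned.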

Having a neural network machine that could clone itself and pass on its intelligence -- well that's the thin edge of the wedge to getting to a Technology Singularity. That's where you start to get Franken computer. That is where you don't know what your machine knows. And with almost every object connected with the Internet of Things and the Internet of Everything, the intelligence in the machine could figure out a way to act and do things in the real world. It could smoke you out of your house by sending a command to your NEST thermostat to boil the air in the house. That's when things get exciting, and that's what scares Dr. Stephen Hawking. Remember he relies on a machine to speak for him. The last thing that he wants is for that machine to have a mind of its own.

I'd write more on the subject, but I have to go to my software development kit and start writing a perturbation model for my object oriented neural nets. I ain't afraid -- YET!

The Caine Mutiny & Self-Driving Cars

One of my most treasured books is a first edition of Herman Wouk's novel "The Caine Mutiny". Published in 1951, it won the Pulitzer Prize and it was on the New York Times Bestseller List for 122 weeks. I was pleasantly surprised to find, at the time of writing this blog post, that Herman Wouk is still alive and 100 years old.

The movie version of Caine Mutiny starring Humphrey Bogart (pictured above) is pretty cheesy, and didn't give a tenth of the subtext that the book gave.

Briefly, the story takes place during World War II in the Pacific naval theater on an obsolete Destroyer Minesweeper called the USS Caine. Since Wouk himself served on two of these types of ships, and his naval career followed the trajectory of an officer in the book, the level of detail of running a naval warship is stunning and intricately documented.

The story line goes like this. An ineffective, incompetent captain is assigned to the ship. His name is Queeg, and in the movie he is played by Humphrey Bogart. Queeg tries to mask and overcome his incompetence by becoming a martinet, blaming his crew for his mistakes and covering up acts of cowardice in battle. His behavior gets weirder as his commanders dress him down for his mistakes while commanding the Caine.

During a typhoon, an able but not intellectual executive officer named Maryk countermands a Queeg order to maintain fleet course that would have sunk the ship. The ship would have foundered by sailing with the wind and sunk in the high waves. Instead, Maryk saves the ship by correctly heading into the wind face on to last out the winds and waves, using the powerful engines of the destroyer to hold his position in the most stable way possible. As a result of the action, some of the crew is charged with mutiny, and the denouement is the court martial trial.

In the book, the author details the minutiae that enable reservists like shoe salesmen, factory workers and college students to conduct naval warfare on a historic scale, with competence that rivals the great sea battles such as those fought by John Paul Jones and Lord Admiral Nelson.

So what does this have to do with AI and self-driving cars? This is a James Burke Connections moment. In the book, the protagonist is a young, rich, immature ensign and former playboy named Willie Keith. Through the course of the book, he matures as a person and rises through the ranks to eventually become the captain of the USS Caine. As a young initiate and a commissioned officer, he is assigned OOD or Officer of the Deck. He learns how to navigate, calculate courses, and issue commands that keep the ship and fleet zigzagging to avoid submarine torpedoes and maintain a sonar net around the fleet. He is the captain's representative when the captain is not on the bridge.

As OOD, Willie has to sign the log book at the end of his watch, and the first time that he signs it, it is a single notation: "Steaming as before. Willie Seward Keith USNR". This was highly instructive to me as it was a Eureka moment for my thoughts on self-driving cars, and the whole reason why I brought up the Caine Mutiny in the first place.

The author, Herman Wouk, was quite accurate in the mundane details of how the ship is run, what it did while sailing in convoy, and what he had to execute as his own OOD duties when he served aboard the USS Zane and the USS Southard. The log would record the course that they were sailing, but would not record the zigs, zags, changes of course, the swapping out of positions for the sonar submarine screen with other destroyers in the convoy, and other "housekeeping" details. These were not logged. But the important events were, and the navy would eventually review those logs if something extraordinary happened. The logs would hold lessons for a postmortem analysis.

This was the "aha" moment for me. Self-driving cars need to log all of their journeys, all of the time, in a temporal database. A temporal database essentially records what was true at any given time. But storing redundant information that belongs to the Department of Redundancy Department is not necessary. If you embarked on a trip on the interstate, you would record your start time and your end time. If you didn't stop at a rest stop, or get into an accident, or do anything to impede your progress, that is all that you need to record. You don't have to record a lot of data at every minute. The biggest inference that you can make would be like the log book of the USS Caine - "Steaming as before.".

But why bother to record anything, including the status quo, if you are steaming as before? Why bother keeping logs? Because of the rich knowledge buried in the data. From that small bit of recorded information, you can derive your average velocity. From the collected data of many, many trips by many cars, you can infer a lot of things. You can infer that the weather was bad if the trip took longer than normal and you didn't stop anywhere. You can infer mechanical conditions. You can infer traffic density. You can engineer a lot of features from the trip data.
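A "steaming as before" log is easy to sketch. The Python below is a toy and not a real temporal database: the `TripLog` class, its method names and the use of seconds since the start of the trip as timestamps are all assumptions for illustration. It stores a state only when it changes, can still answer what was true at any given time, and derives an average speed from nothing more than start time, end time and distance.

```python
import bisect


class TripLog:
    """Stores a state only when it changes; the gaps mean 'steaming as before'."""

    def __init__(self):
        self._times = []   # seconds since trip start, in ascending order
        self._states = []  # state that became true at the matching time

    def record(self, t, state):
        """Log a state at time t. Returns False if nothing changed."""
        if self._states and self._states[-1] == state:
            return False  # redundant entry, not worth storing
        self._times.append(t)
        self._states.append(state)
        return True

    def state_at(self, t):
        """What was true at time t: the latest entry at or before t."""
        i = bisect.bisect_right(self._times, t) - 1
        return self._states[i] if i >= 0 else None


def average_speed_kmh(distance_km, start_s, end_s):
    """Average speed from distance plus start and end times in seconds."""
    return distance_km / ((end_s - start_s) / 3600.0)


log = TripLog()
log.record(0, "cruising")
log.record(60, "cruising")   # ignored: steaming as before
log.record(120, "braking")
```

The log holds two entries instead of three, and the savings grow with every uneventful minute on the interstate.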

But the most valuable data gleaned from collecting a lot of logs of every trip that a self-driving car makes, is that you can make a self-driving car a lot smarter, if it and others like it can learn from the mistakes and successful corrective actions of other vehicles that have driven the road.

Driving is not a completely linear event. Speed varies. Road conditions vary. Random events take place, such as an animal running out in front of the car, or another vehicle cutting you off. If you collected every IRRR (Input, Reaction, Resultant Reaction), you would have quite a library of events that could only improve the driving ability of every autonomous car. Self-driving cars could emulate human drivers. They can get better with more practice and by learning from others.

But let's go one step further. In a connected car, the internet is always on. The car is always positionally aware of where it is. Suppose that as you drove down a particular stretch of road, an electronic milestone would download some neural nets into the car that would enhance the car's ability to navigate that particular section of road. And where would that come from? From the collected event logs that not only had the "Steaming as before" data but the data on how other drivers successfully negotiated conditions and hazards on that particular stretch of road.

With storage being incredibly cheap and in the cloud, this sort of continuous data collection and processing would be easy. Many people will point out privacy concerns, but if there was a standard for the neural nets or a library of driving moves for self-driving cars, or even a standardized neural net interchange network, only the processed data would be required, without vehicle identification. As a matter of fact, when the legislation for self-driving cars comes in, it should specifically prohibit vehicle identification in the data collection stage.

Self-driving cars collecting temporal data can have lots of benefits. With aggregated data, you can have an expected MTBF, or machine-determined Mean Time Between Failures. The car could drive itself to get serviced when it is required -- usually after it has delivered you to work. And it can book the service appointment on the fly when it reaches a critical juncture.
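The MTBF arithmetic is simple enough to sketch in Python. The fleet figures, the function names and the 0.8 safety factor below are assumptions chosen for illustration. A real service policy would be set by the manufacturer.

```python
def mean_time_between_failures(operating_hours, failure_count):
    """MTBF = total operating time / number of failures observed."""
    if failure_count == 0:
        return None  # no failures observed yet, so MTBF is undefined
    return operating_hours / failure_count


def service_due(hours_since_service, mtbf_hours, safety_factor=0.8):
    """Flag a service visit once usage reaches a fraction of the MTBF."""
    return hours_since_service >= safety_factor * mtbf_hours


# Made-up aggregated figures for one component across many cars.
fleet_hours = 120_000
fleet_failures = 40
mtbf = mean_time_between_failures(fleet_hours, fleet_failures)
```

With these numbers the MTBF works out to 3,000 hours, so a car at 2,500 hours since its last service would be flagged and one at 1,000 hours would not.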

With the "Steaming as before" logged data collection, the act of getting driven somewhere could and will be automatic, without a thought given to the process of actually getting yourself to your destination. The car will take care of all of the logistics for you.

As for me, to hell with the data. I'm going to invest in a connected car wash specifically to clean self-driving sensors, and have an automated beacon system to lure the autonomous cars in for a wash and wax. They will pop in and out, and continue steaming as before but now much cleaner.

Why I Won't Be Re-Joining The BBC Global Minds Panel

I recently received the following email from BBC Global Minds.

Hi Ken Bodnar,

Thanks for your continued interest and participation in the BBC Global Minds panel.

Here at the BBC, we’re always thinking about how we can make your experience on Global Minds a great one. Therefore we’re introducing a brand new site with a fresh new look and layout.

As part of this we’re changing over our supplier from Vision Critical to eDigitalResearch, and if you’re interested in continuing your membership you’ll need to click on the link below to sign up with the new site.

If the above link doesn't work please copy and paste the following into your browser:

We hope you like the new Global Minds panel, and we’re looking forward to sharing some new surveys and polls with you in the coming weeks.

Please note, if you don’t click on the link and complete the 5 quick questions to sign up then you’ll no longer be a member of the Global Minds panel and won’t be able to give us your important feedback.

Kind Regards,

The BBC Global Minds Team

The BBC Global Minds panel was like a self-forming focus group. Anyone could sign up, and they would periodically have surveys for programming on the BBC. I don't mind giving my opinion, because they are a quality news and programming source.

However, I am not going to follow the direction and re-sign up for Global Minds. I first soured on this type of thing when they sent me an email requesting access to my laptop video camera so that they could record me as I watched a BBC video. They have to be nuts to think that I would (1) grant them access to my laptop, (2) allow myself to be filmed and (3) do so without knowing what would happen to the video in the end, including whether it would be destroyed.

That aside, one would think that they would think highly enough of their focus group panel to at least have their new survey company come up with a plan to migrate their contacts. I am not going to fill out a form for yet another survey company who will own my contact data, and who knows what the heck they will do with it. They probably wouldn't sell it, but I don't know them from a hole in the ground, and I don't trust them with my contact data. When you read about A-list companies having data breaches, I don't really trust anyone, or believe in the wide promulgation of my contact data and personal details.

When I see that they just have a handful of Twitter followers, methinks that they need more than a new survey company. I understand that they are under siege from the current UK government, which doesn't believe in the value of a national broadcaster, but surely they can squeeze a fat-cat, do-nothing director out and hire someone to coordinate their social media. Hell, I would be pleased to advise them for free as to how to maximize their content engagement with the world.

So, sad to say, I will not be re-joining the BBC Global Minds Panel.

Performance Analytics Guide To Aviva Premiership Rugby Standings

Every professional sport has its analytics and statistics department because player and team analysis translates into money. Curiously, professional rugby has resisted applying data science to performance, despite the efforts of myself and some others. The owners and coaches simply do not believe that extreme analytics has a part to play, holding the view that rugby is essentially a stochastic game that cannot be predicted on a macro level. In the olden days, the coach used to take a seat, sometimes in the stands, once the game began. Of course, they are wrong if they believe that performance on every level can't be measured and predicted.

Even the simplest of metrics and analyses can benefit a team, or benefit someone betting on a team. I have developed a software package called RugbyMetrics to digitize a game from video so that analytics and data mining can be run on the game record. Here is a screen shot of the capture mode:

The degree of granularity goes right down to the player and events. One of the biggest advantages of using analytics is that it can determine the level of compensation based on ability relative to peers. But the current crop of owners and coaches are leery of using deep-dive analytics.

The field of analytics and its mathematical underpinnings have evolved greatly in the past twenty years. As a mini-tutorial, and as a prediction of the final standings at the end of the 2015-2016 campaign, I will demonstrate the Pythagorean Analytics Won Loss Formula for evaluating team performance and ultimate standings at the end of the season.

In the early part of the season, as it is now in the Aviva Premiership Rugby season, only 8 matches have been played. The top team, the Saracens, have 36 points and the bottom team, the London Irish, have 4 points. While this seems like a lot, each win garners 4 points, so the chasm between the bottom and the top doesn't seem that drastic to the casual observer. Let me quote the league rules on how points are amassed:

During Aviva Premiership Rugby points will be awarded as follows:

• 4 points will be awarded for a win

• 2 points will be awarded for a draw

• 1 point will be awarded to a team that loses a match by 7 points or less

• 1 point will be awarded to a team scoring 4 tries or more in a match

In the case of equality at any stage of the Season, positions at that stage of the season shall be determined firstly by the number of wins achieved and then on the basis of match points differential. A Club with a larger number of wins shall be placed higher than a Club with the same number of league points but fewer wins.

If Clubs have equal league points and equal number of wins then a Club with a larger difference between match points "for" and match points "against" shall be placed higher in the Premiership League than a Club with a smaller difference between match points "for" and match points "against".
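The quoted rules translate fairly directly into code. The Python sketch below awards league points for a single match and orders clubs by league points, then wins, then match points differential. The function names and the `Club` record are illustrative assumptions. It also assumes the try bonus applies whatever the result, since the quoted rules do not restrict it.

```python
from dataclasses import dataclass


def match_league_points(points_for, points_against, tries_scored):
    """League points earned from one match under the rules quoted above."""
    if points_for > points_against:
        earned = 4                      # win
    elif points_for == points_against:
        earned = 2                      # draw
    else:
        earned = 0
        if points_against - points_for <= 7:
            earned += 1                 # losing bonus: lost by 7 points or less
    if tries_scored >= 4:
        earned += 1                     # try bonus: 4 tries or more
    return earned


@dataclass
class Club:
    name: str
    league_points: int
    wins: int
    points_for: int
    points_against: int


def standings(clubs):
    """Order by league points, then wins, then match points differential."""
    return sorted(
        clubs,
        key=lambda c: (c.league_points, c.wins, c.points_for - c.points_against),
        reverse=True,
    )
```

For example, `match_league_points(30, 10, 4)` gives 5 (a win plus the try bonus), while a 10-15 loss with one try gives 1 for the losing bonus.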

OK, so you see the way that a team amasses league points after the game is played. During the game, the chief method of scoring is called a try, where the ball is downed behind the goal line. That gives you five points and, like football, if you kick a conversion, you get 2 extra points. You can also kick a penalty or a drop goal, and those are worth three points each. In the past, the points for these various scoring methods have varied.

The current league table looks like this:

As you can see, each team has played 8 games. The Saracens have won them all and the London Irish have won only one. The Sarries, in first place, have amassed a total of 218 points for themselves across the 8 games and have yielded 81, for a difference of 151. In terms of the major scoring of tries, they have made 24 tries and 5 have been scored against them. Impressive. The Chiefs have scored more tries but are in second place. This would indicate that their defensive capabilities don't match their offense, and the 12 tries scored against them (compared to the 5 against the Sarries) prove it.

So one would look at the table and say that a few teams still have a shot at winning or getting into the top 4. For example, I admire the Saracens, but I like the Tigers very much, and RugbyMetrics was developed with Bath in mind. So what are their chances? How will it finish when it is all said and done? That's where the predictive power of the Pythagorean Analytics Won Loss Formula comes in.

So what does this Pythago-thing-a-majig do? I am going to talk technical for a minute. If your eyes glaze over, skip this paragraph. Mathematically, the points that a team scores and the points scored against a team are drawn from what are known as independent translated Weibull distributions. In statistics, the Weibull distribution is a continuous probability distribution. It is named after Swedish mathematician Waloddi Weibull. What is a probability distribution? A probability distribution assigns a probability to each measurable subset of the possible outcomes of a procedure of statistical inference. And we are going to infer the win ratio based on how often the team scores and how often they are scored against. That is the end goal of what we are doing. Here is an infographic of the proof that goals (tries) for and goals against can be modeled as Weibull distributions. Through my math trials I have found a proprietary exponent for calculating the Pythagorean Won Loss formula.
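In its general form, the Pythagorean Won Loss formula estimates the win ratio as scored^x / (scored^x + conceded^x), where x is the exponent. The Python sketch below shows that general form only. The exponent used in this post is proprietary, so the 1.8 here is an assumed value, picked because it lands close to the try-based Saracens figure quoted later in this post (24 tries for, 5 against). The function and constant names are illustrative.

```python
def pythagorean_win_ratio(scored, conceded, exponent):
    """Expected win ratio: scored^x / (scored^x + conceded^x)."""
    if scored == 0 and conceded == 0:
        return 0.5  # no scoring information either way
    return scored ** exponent / (scored ** exponent + conceded ** exponent)


# The author's exponent is proprietary; 1.8 is an assumed value for illustration.
ASSUMED_EXPONENT = 1.8

# Saracens after 8 matches, from the figures in this post: 24 tries for, 5 against.
saracens_tries = pythagorean_win_ratio(24, 5, ASSUMED_EXPONENT)
print(round(saracens_tries, 3))  # about 0.944
```

A team that scores exactly as much as it concedes comes out at 0.5 whatever the exponent, which is why the exponent only matters for how sharply a scoring edge is rewarded.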

OK, so all of the theory aside, what do the results look like? Who will rise to the top and who will sink to the bottom when it is all said and done?

Currently the standings, early in the season look like this:

| Position | Team |
| --- | --- |
| 1 | Saracens |
| 2 | Exeter Chiefs |
| 3 | Harlequins |
| 4 | Leicester Tigers |
| 5 | Northampton Saints |
| 6 | Gloucester Rugby |
| 7 | Sale Sharks |
| 8 | Bath Rugby |
| 9 | Wasps |
| 10 | Worcester Warriors |
| 11 | Newcastle Falcons |
| 12 | London Irish |

When we extrapolate winning performance based on points scored for and against using the Pythagorean Won Loss Formula, the predicted outcome changes the order. The Won/Loss ratios are shown as decimals, with each team's current position in the first column. Using their scoring record, the Sarries are expected to win 86.9 times out of a hundred against their Premiership rivals.

| Current position | Team | Pythagorean win ratio |
| --- | --- | --- |
| 1 | Saracens | 0.869224 |
| 2 | Exeter Chiefs | 0.756876 |
| 5 | Northampton Saints | 0.609814 |
| 3 | Harlequins | 0.60633 |
| 4 | Leicester Tigers | 0.563826 |
| 8 | Bath Rugby | 0.517414 |
| 9 | Wasps | 0.505625 |
| 6 | Gloucester Rugby | 0.492264 |
| 7 | Sale Sharks | 0.399586 |
| 10 | Worcester Warriors | 0.357453 |
| 11 | Newcastle Falcons | 0.206142 |
| 12 | London Irish | 0.193901 |

Note that the Northampton Saints jump from 5th place to 3rd, dropping the Harlequins by one, and the Tigers drop to 5th, while Bath rises to 6th. The top two and the bottom three teams are where they should be. Poor old Gloucester drops to 8th place.

Let's take this analysis a little deeper though. The above Pythagorean Won Loss formula is based on total points. The main offensive ability results in tries, worth either 5 or 7 points depending on whether the conversion is made. Luckily, we have the stats for tries for and against, which eliminates the points for drop goals and penalties. This reflects the upper boundaries of where the team would end up based on try-scoring ability. Calculating the Pythagorean Won Loss formula using the try data alone again gives hope to certain fans.

| Current position | Team | Pythagorean win ratio (tries only) |
| --- | --- | --- |
| 1 | Saracens | 0.943933 |
| 2 | Exeter Chiefs | 0.811483 |
| 5 | Northampton Saints | 0.639505 |
| 8 | Bath Rugby | 0.636054 |
| 3 | Harlequins | 0.585137 |
| 4 | Leicester Tigers | 0.535957 |
| 9 | Wasps | 0.5 |
| 6 | Gloucester Rugby | 0.418684 |
| 10 | Worcester Warriors | 0.349619 |
| 7 | Sale Sharks | 0.32523 |
| 12 | London Irish | 0.212348 |
| 11 | Newcastle Falcons | 0.1612 |

The top three remain the same as in the total points analysis. Based on tries alone, Bath Rugby, currently in 8th, has the ability to finish in 4th place. Worcester climbs up one over Sale, and the bottom two are the same teams, although London Irish edges ahead of Newcastle.

So if you are a betting man, you have a decent chance of taking this list to Ladbrokes or some other wagering shop, and making a couple of quid on the good old Pythagorean Won Loss formula.

Most of the rest of RugbyMetrics is player-centric for the benefit of measuring individual performance. I predict that the first team that adopts this, and brings home the silverware, will open the floodgates for performance analytics in professional rugby.

AI Risk Radar For Self-Driving Cars

There is a lot in the news about self-driving cars. Google has one. Apple is building one. Mercedes already has a self-driving transport. Even the kid (George Hotz) who carrier-unlocked the iPhone and hacked the PlayStation built himself a self-driving car.

You read about LIDAR systems (Wikipedia: Lidar (also written LIDAR, LiDAR or LADAR) is a remote sensing technology that measures distance by illuminating a target with a laser and analyzing the reflected light. Although thought by some to be an acronym of Light Detection And Ranging, the term lidar was actually created as a portmanteau of "light" and "radar".) and camera systems with real time video analysis, etc.

Several makes and models already have partially autonomous functions, like parallel parking and lane departure systems that warn you when you leave the lane. Some cars will autonomously steer on a highway when cruise control is on. However, what is missing from the whole autonomous driving picture is some real brains behind the driving.

When a human is driving a car -- especially a human who is a good driver and taught to drive defensively -- they always have a layer of abstraction that I like to call a risk radar turned on.

The risk radar while driving is like an analysis and environment supervisory circuit that is not directly involved in actually driving the car, but processes meta-data about driving.

For example, a risk radar would look at the outdoor temperature and if it is freezing, it would devote some cycles to see if there is black ice on the road.

A risk radar notices that on a highway with two or more lanes, if you are driving in another vehicle's blind spot, you should either slow down or speed up.

A risk radar notices that you are in a high deer collision area on the highway, so some extra cycles are devoted to looking for the pair of reflective phosphorescent eyes on the side of the road.

If you had to brake heavily to avoid collision, a risk radar will model the circumstance that caused that extreme corrective action and will be comparing all environmental parameters, conditions and events to watch out for similar situations. When one or more of those risk factors are present, it goes into high alert mode.

A risk radar can actually modify the way that a vehicle is driven. Self-driving cars will have to have sensors such as accelerometers to detect yaw and pitch due to high winds, or hydroplaning on a wet road. Risk radar would note these things.

Risk radar would count cars and notice if the traffic was heavy. Risk radar will notice the speed of travel of other cars and make inferences about it.

Risk radar will have a range of characteristics. Not only will it have a library of risks and the mitigation actions associated with them, but it will also have both supervised and unsupervised machine learning. In machine learning, supervised learning is where the machine is given the correct result so it can self-adjust weights and thresholds to learn risks and risk mitigation actions. By the same token, unsupervised learning is where the machine infers structure from unlabeled input data (through various algorithms such as clustering).

The biggest element of risk radar for self-driving cars is that it must be time-domain aware. Time-domain awareness means that it must know that certain events and actions follow the arrow of time and may or may not be caused by the preceding state. This state of cognition with time awareness is a form of artificial consciousness (see the blog entry below), and it is important in implementing a high-level autonomous decision as to what algorithm will be used to drive the car. For example, if the risk radar warrants it, the car would move into a cautious driving algorithm. If the road was icy, the car would under-steer to prevent skidding. This would require coordination between acceleration and braking that would be different from ordinary driving.
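The library-of-risks part of this idea can be sketched as a small rule table in Python. Everything here is an illustrative assumption: the `Conditions` fields, the thresholds, the action strings and the rule that two active risks switch the car to a cautious mode. It covers only hand-written rules, not the learned or time-aware parts described above.

```python
from dataclasses import dataclass


@dataclass
class Conditions:
    outdoor_temp_c: float
    in_blind_spot: bool
    deer_zone: bool
    nearby_vehicles: int


# Each rule pairs a check with the extra attention it asks for.
# Thresholds are illustrative assumptions, not calibrated values.
RISK_RULES = [
    (lambda c: c.outdoor_temp_c <= 0, "scan road surface for black ice"),
    (lambda c: c.in_blind_spot, "speed up or slow down to leave blind spot"),
    (lambda c: c.deer_zone, "watch roadside for animal eyes"),
    (lambda c: c.nearby_vehicles >= 20, "treat traffic as heavy"),
]


def risk_radar(conditions):
    """Return the mitigation actions triggered by the current conditions."""
    return [action for check, action in RISK_RULES if check(conditions)]


def driving_mode(actions, alert_threshold=2):
    """Switch to a cautious driving algorithm when enough risks are active."""
    return "cautious" if len(actions) >= alert_threshold else "normal"
```

On a freezing night in deer country, `risk_radar(Conditions(-3.0, False, True, 5))` returns two actions and `driving_mode` answers "cautious".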

The risk radar necessary for cars would be an evolving paradigm. Cars driving in downtown New York would have different risk points than cars self-driving in Minnesota. Having said that, if there were a standardized paradigm for the interchange of trained neural nets, a GPS waypoint would load the appropriate neural nets into the car for the geographic area that it was driving in.
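Here is one way the waypoint lookup might work, as a Python sketch. No such interchange standard exists yet, so the waypoint list, the radii and the net names are all hypothetical, and the coordinates are only approximate. The distance check uses the standard haversine formula.

```python
from math import asin, cos, radians, sin, sqrt


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points in kilometres."""
    lat1, lon1, lat2, lon2 = map(radians, (lat1, lon1, lat2, lon2))
    a = sin((lat2 - lat1) / 2) ** 2 + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2
    return 2 * 6371.0 * asin(sqrt(a))


# Hypothetical waypoints: (latitude, longitude, radius in km, net name).
WAYPOINTS = [
    (40.7128, -74.0060, 30.0, "dense-urban-net"),     # roughly New York City
    (46.7296, -94.6859, 400.0, "rural-winter-net"),   # roughly central Minnesota
]


def nets_for_position(lat, lon, waypoints=WAYPOINTS):
    """Names of the trained nets whose waypoint radius covers the position."""
    return [
        name
        for w_lat, w_lon, radius_km, name in waypoints
        if haversine_km(lat, lon, w_lat, w_lon) <= radius_km
    ]
```

A car in Manhattan would be handed the urban net, one near Minneapolis the winter net, and one outside every radius would get an empty list and carry on with its default behavior.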

The risk radar for self-driving cars is a necessity before these autonomous vehicles are seen ubiquitously on all streets. It really is a Brave New World, and it is within our grasp.
